Combining our insights from the research and prototyping stages, we present AdaptiveWatch, a future-focused TV viewing experience that we envision being integrated as a toggleable feature across all TV streaming platforms. AdaptiveWatch uses user-purchased sensors, such as smartwatches, cameras, and smart speakers, to infer the user’s emotion, attention, and personal viewing habits. Using that information, it will leverage Deep Learning models to synthetically adapt the TV content in real time based on the user’s context, dynamically adjusting the content to meet the user’s emotional, cognitive, and personal viewing needs.

AdaptiveWatch makes real-time stylistic changes to the plotline, weather, music, and color grading, adapting the content to the user's emotion and personal viewing preferences to enhance the TV viewing experience.

Our previous research found that people want to immerse themselves in virtual environments that reflect their mood or current emotional state. Whether the user’s goal is to wallow in their sadness or counteract it with something happy, AdaptiveWatch will be able to adapt the plotline and the scene styles through Deep Learning to improve the TV viewing experience. AdaptiveWatch draws on literature and psychology research to map certain color, music, and weather changes to human emotions. In addition, by tracking the user’s historical viewing preferences, AdaptiveWatch leverages Deep Learning to synthetically alter the plotline. It will either incorporate narratives and features that the user has historically enjoyed, or censor and remove narratives that the user may dislike based on prior viewing experiences. Play with our interactive prototypes below to see how AdaptiveWatch can be integrated with existing TV streaming services.

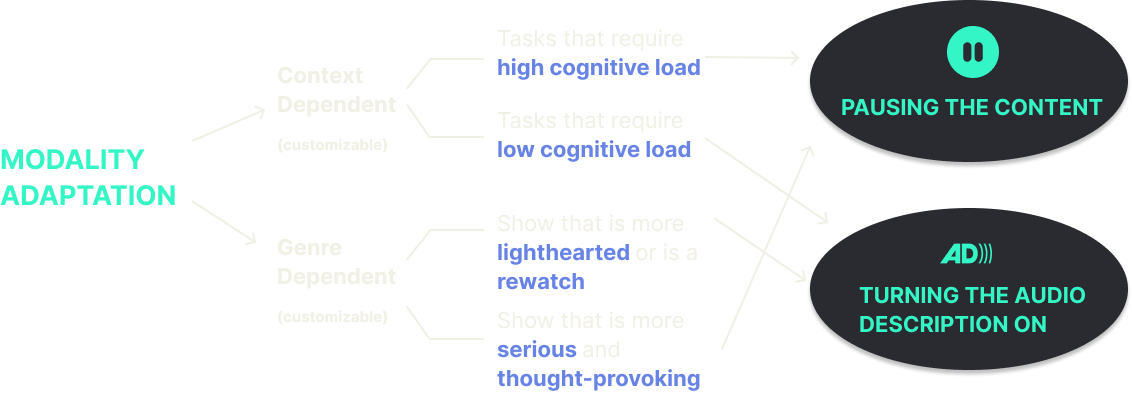

A growing number of users have begun to think of TV as a secondary or background activity. Part of what makes it so appealing is that it requires no active input once you start watching. However, there are times when you might need to manually pause the show in order to reply to a message or check on food in the kitchen. Our system detects your activity automatically while you are watching TV and adapts the content accordingly, either pausing or switching to an audio narration format, so you never miss any part of the show.
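The attention-based behavior described above can be sketched as a small decision rule. This is only an illustrative sketch, not the AdaptiveWatch implementation: the `Activity` categories, the `choose_playback_mode` function, and the specific pairing of activities with playback modes are all assumptions made for the example, since the prototype only establishes that playback either pauses or switches to audio narration.

```python
from enum import Enum


class Activity(Enum):
    """Hypothetical viewer states a sensor pipeline might report."""
    WATCHING = "watching"          # eyes on the screen
    LOOKING_AWAY = "looking_away"  # e.g. replying to a message, still within earshot
    OUT_OF_ROOM = "out_of_room"    # e.g. checking on food, out of earshot


class PlaybackMode(Enum):
    NORMAL = "normal"
    AUDIO_NARRATION = "audio_narration"
    PAUSED = "paused"


def choose_playback_mode(activity: Activity, adaptive_enabled: bool = True) -> PlaybackMode:
    """Pick a playback mode for the detected activity.

    Assumed mapping (not taken from the prototype): a viewer who can still
    hear the show gets audio narration, and a viewer out of earshot gets a pause.
    """
    if not adaptive_enabled:
        # The user has toggled adaptation off, so playback is left untouched.
        return PlaybackMode.NORMAL
    if activity is Activity.LOOKING_AWAY:
        return PlaybackMode.AUDIO_NARRATION
    if activity is Activity.OUT_OF_ROOM:
        return PlaybackMode.PAUSED
    return PlaybackMode.NORMAL
```

Keeping the `adaptive_enabled` check first reflects the idea that the user's toggle always takes priority over whatever the sensors detect.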

Personal data privacy concerns came up several times in our user study, and the majority of users wanted to be in the driver’s seat to determine when and how the content would be adapted. On the other hand, users also expressed frustration when manually inputting their emotions into the system, as it distracted them from the TV viewing experience and required them to consciously think about how they felt at pivotal moments in the TV content.

To resolve these conflicting pain points, we envision a future where users will feel more comfortable adopting emerging and existing smart technologies to track their emotions. However, to ensure that TV users are comfortable during the early stages of adopting AdaptiveWatch, we have provided manual controls that they can use to dictate their TV viewing experience. With AdaptiveWatch, users can toggle the system on and off whenever they want during their viewing session, so they can enjoy content either as the artist originally intended or in a personalized version tailored to their preferences.

Users’ historical viewing data has also been integral to personalizing the TV viewing experience. Prior prototyping suggested that looking strictly at users’ emotions and attention was insufficient, because different users liked and disliked different aspects of the show, ranging from the visual elements of the TV content to specific elements in the plot and narratives. To further personalize the TV viewing experience, AdaptiveWatch will leverage users’ historical viewing data to track which shows they have enjoyed or disliked, and how they have reacted emotionally to certain shows. Using that data, AdaptiveWatch will further adapt and tailor the TV content synthetically to their personal viewing preferences. To address data privacy concerns, AdaptiveWatch will store the data safely on a local system that is dedicated to enhancing the user’s TV viewing experience, and users will be able to control when and how this data can be used for their viewing experience.

We put a spin on the classic European fairy tale Little Red Riding Hood. The narrative that we all know, in which a young girl visits her grandmother in the woods, both are eaten by a wolf, and they are then rescued by a passing hunter, serves as our base narrative. On top of the base narrative, we created three other narratives (sad, horror, and happy) to simulate on-the-fly plot adaptation based on users’ emotional inputs. Aside from the plotline adaptation, we have also incorporated stylistic changes, including weather, background music, and colors, to see which change(s) users prefer to have during their viewing session.
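To make the prototype structure concrete, the sketch below shows one way the base narrative and its three variants could be paired with stylistic presets and selected from an emotional input. It is a hypothetical illustration: `ScenePreset`, `PRESETS`, and `select_preset` are names invented for this example, and the specific weather, music, and color values are placeholders rather than the mappings used in our prototypes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenePreset:
    narrative: str    # which version of the story to play
    weather: str      # weather shown in the scene
    music: str        # background music style
    color_grade: str  # overall color treatment


# The unmodified Little Red Riding Hood story, as the artist intended it.
BASE = ScenePreset("base", "original", "original", "original")

# Placeholder style values, assumed purely for illustration.
PRESETS = {
    "sad": ScenePreset("sad", "rain", "slow piano", "desaturated blue"),
    "horror": ScenePreset("horror", "fog", "dissonant strings", "dark, high contrast"),
    "happy": ScenePreset("happy", "sunny", "upbeat folk", "warm and saturated"),
}


def select_preset(target_mood: str, adaptive_enabled: bool = True) -> ScenePreset:
    """Return the preset for the mood the user wants, or the base version.

    `target_mood` is the mood the user wants the content to take on, which may
    match their current emotion (wallowing) or oppose it (counteracting).
    """
    if not adaptive_enabled:
        return BASE
    # Unknown moods fall back to the base narrative instead of raising an error.
    return PRESETS.get(target_mood, BASE)


print(select_preset("sad").weather)
```

Keying the table by the mood the user wants, rather than the mood they are in, leaves room for both goals identified in our research: matching the current emotion or counteracting it.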